The Science of Psychoacoustics: Unraveling the Fascinating World of Sound Perception

Posted: April 27th, 2024, 4:43 pm

Have you ever wondered how our brains interpret the sounds we hear? How do we distinguish between different voices, instruments, or even emotions conveyed through sound? The answer lies in the fascinating field of psychoacoustics – the study of the psychological and physiological responses to sound. In this introductory post, we'll dive into the captivating world of psychoacoustics and explore how it shapes our auditory experiences.

What is Psychoacoustics?

Psychoacoustics is an interdisciplinary field that combines elements of psychology, acoustics, electronic engineering, physics, biology, physiology, and computer science. It focuses on understanding how the human brain perceives, processes, and interprets sound. By studying the relationship between the physical properties of sound and the subjective experiences they evoke, psychoacoustics aims to unravel the mysteries of auditory perception.

Psychophysics and Psychoacoustics

Psychoacoustics is a branch of psychophysics, which quantitatively investigates the relationship between physical stimuli and the sensations and perceptions they produce. In the context of psychoacoustics, this involves studying how the human auditory system perceives various sounds, including noise, speech, and music. By manipulating the physical properties of sound stimuli and measuring the corresponding psychological responses, psychoacoustics seeks to establish quantitative relationships between the two.

Psychophysical methods, such as threshold detection, magnitude estimation, and discrimination tasks, are commonly employed in psychoacoustic research. These methods allow researchers to quantify the limits of auditory perception, determine the just-noticeable differences between sounds, and investigate the subjective scales of perceptual attributes like loudness and pitch. By applying rigorous experimental designs and statistical analyses, psychoacoustics aims to develop mathematical models and theories that accurately describe the relationship between physical sound stimuli and their corresponding perceptual experiences.
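To make threshold detection concrete, here is a minimal Python sketch of one widely used adaptive procedure, the 2-down/1-up staircase (Levitt, 1971). It converges on the level a listener gets right about 70.7% of the time. The listener is simulated: the logistic psychometric function, the 20 dB threshold, the step size and the function names are all illustrative assumptions, not data from a real experiment.

```python
import math
import random


def simulated_listener(level_db: float, rng: random.Random,
                       threshold_db: float = 20.0, slope_db: float = 2.0) -> bool:
    """Hypothetical 2AFC listener: P(correct) rises from 50% (guessing) toward 100%
    along a logistic psychometric function centred on threshold_db."""
    p_correct = 0.5 + 0.5 / (1.0 + math.exp(-(level_db - threshold_db) / slope_db))
    return rng.random() < p_correct


def two_down_one_up(start_db: float = 40.0, step_db: float = 2.0, n_reversals: int = 12,
                    n_average: int = 8, seed: int = 1, max_trials: int = 2000) -> float:
    """Estimate the ~70.7%-correct level with a 2-down/1-up staircase."""
    rng = random.Random(seed)
    level = start_db
    correct_in_row = 0
    direction = 0              # -1 = moving down, +1 = moving up, 0 = no step yet
    reversals = []
    for _ in range(max_trials):
        if len(reversals) >= n_reversals:
            break
        if simulated_listener(level, rng):
            correct_in_row += 1
            if correct_in_row < 2:
                continue       # one correct answer is not enough to make it harder
            correct_in_row = 0
            new_direction = -1  # two correct in a row: lower the level
        else:
            correct_in_row = 0
            new_direction = +1  # any error: raise the level
        if direction != 0 and new_direction != direction:
            reversals.append(level)  # the track changed direction here
        direction = new_direction
        level += new_direction * step_db
    if not reversals:
        raise RuntimeError("no reversals recorded; increase max_trials")
    last = reversals[-n_average:]
    return sum(last) / len(last)    # threshold = mean of the last reversal levels


print(f"estimated threshold: {two_down_one_up():.1f} dB")
```

With these made-up parameters the simulated listener's 70.7% point is about 19.3 dB, so the estimate should land near that value. Averaging more reversals, or shrinking the step after the first few reversals, trades testing time for precision.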

Here is a highly recommended crash course in psychoacoustics, from the peripheral auditory system and masking to sound quality:

The Physiology of Hearing

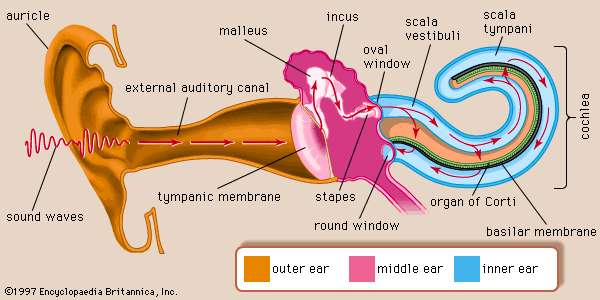

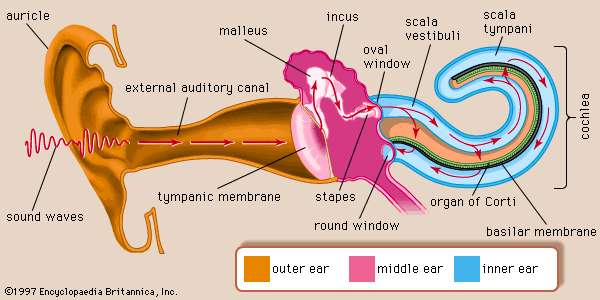

To grasp the foundations of psychoacoustics, it's essential to understand the physiology of hearing. When sound waves enter our ears, they travel through the auditory canal and cause the eardrum to vibrate. These vibrations are then transmitted to the middle ear, where three tiny bones – the malleus, incus, and stapes – amplify and transmit the vibrations to the inner ear.

The inner ear, specifically the cochlea, plays a crucial role in the perception of sound. The cochlea is a fluid-filled, spiral-shaped structure lined with thousands of tiny hair cells. These hair cells are arranged in a frequency-specific manner, with high-frequency sounds stimulating hair cells at the base of the cochlea and low-frequency sounds stimulating hair cells at the apex. This tonotopic organization allows the brain to differentiate between different frequencies and perceive pitch.
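The tonotopic layout can be written down as a place-frequency map. The sketch below uses Greenwood's (1990) function with constants commonly quoted for the human cochlea (A = 165.4, a = 2.1, k = 0.88). Treat it as an approximation of an average ear; the function names are illustrative.

```python
import math

# Greenwood (1990) place-frequency map, constants commonly used for the human cochlea.
A_HZ = 165.4
ALPHA = 2.1
K = 0.88


def greenwood_frequency(x: float) -> float:
    """Characteristic frequency (Hz) at relative distance x from the apex (0 = apex, 1 = base)."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie between 0 (apex) and 1 (base)")
    return A_HZ * (10 ** (ALPHA * x) - K)


def greenwood_position(freq_hz: float) -> float:
    """Inverse map: relative distance from the apex where freq_hz is best represented."""
    return math.log10(freq_hz / A_HZ + K) / ALPHA


for f in (125, 1000, 8000):
    print(f"{f:>5} Hz -> {greenwood_position(f):.2f} of the way from apex to base")
```

Running it places 125 Hz, 1 kHz and 8 kHz at roughly 0.10, 0.40 and 0.81 of the way from apex to base, and the end points of the map come out near 20 Hz and 20.7 kHz.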

As sound vibrations travel through the cochlear fluid, they deflect the hair bundles on top of the hair cells, which converts the mechanical motion into electrical signals. These signals are passed to the auditory nerve, which carries the information through relay stations in the brainstem and thalamus to the auditory cortex for further processing and interpretation. The brain's complex neural networks analyze the incoming auditory information, extracting relevant features such as pitch, timbre, and spatial location, and integrating them into a coherent perceptual experience.

Pitch Perception

One of the fundamental aspects of psychoacoustics is pitch perception. Pitch is the subjective sensation of a sound's frequency, allowing us to distinguish between high and low tones. The brain's ability to perceive pitch is remarkably precise: around 1 kHz, listeners can typically detect a frequency change of just a few hertz, well under one percent. However, the perception of pitch is not solely dependent on the physical frequency of a sound; it is also influenced by factors such as loudness, duration, and the presence of harmonics.

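The perception of pitch is closely tied to the ear's frequency analysis, which is often described in terms of critical bands. A critical band is the frequency range over which the ear's frequency-analyzing mechanism integrates energy, behaving roughly like one filter in a bank of overlapping bandpass filters. About 24 contiguous critical bands cover the audible range. Their width is not constant: below about 500 Hz a critical band is roughly 100 Hz wide, while at higher frequencies it grows to roughly a fifth of the center frequency, close to one-third of an octave. Two tones that fall within the same critical band are not heard as separate components; instead they interact, producing beats or roughness, masking each other strongly, and contributing to loudness through their combined intensity rather than adding up as tones in different critical bands do.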
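The critical-band scale is usually expressed in Bark. The sketch below uses the Zwicker and Terhardt (1980) approximations for critical-band rate and bandwidth, plus the Glasberg and Moore (1990) equivalent rectangular bandwidth (ERB), a closely related measure, and compares them with a third-octave band. The formulas are published fits to experimental data; the function names are illustrative.

```python
import math


def hz_to_bark(f_hz: float) -> float:
    """Critical-band rate in Bark (Zwicker & Terhardt, 1980 approximation)."""
    return 13.0 * math.atan(0.00076 * f_hz) + 3.5 * math.atan((f_hz / 7500.0) ** 2)


def critical_bandwidth_hz(f_hz: float) -> float:
    """Critical bandwidth in Hz around centre frequency f_hz (Zwicker & Terhardt, 1980)."""
    return 25.0 + 75.0 * (1.0 + 1.4 * (f_hz / 1000.0) ** 2) ** 0.69


def erb_hz(f_hz: float) -> float:
    """Equivalent rectangular bandwidth in Hz (Glasberg & Moore, 1990)."""
    return 24.7 * (4.37 * f_hz / 1000.0 + 1.0)


for f in (100, 500, 1000, 4000, 10000):
    third_octave = f * (2 ** (1 / 6) - 2 ** (-1 / 6))   # width of a 1/3-octave band centred on f
    print(f"{f:>6} Hz: {hz_to_bark(f):5.1f} Bark, CB {critical_bandwidth_hz(f):6.0f} Hz, "
          f"ERB {erb_hz(f):6.0f} Hz, 1/3 octave {third_octave:6.0f} Hz")
```

The printout shows the pattern described above: at 100 Hz the critical band is about 100 Hz wide, several times a third-octave band, while at several kilohertz it is of the same order as a third octave. The scale reaches about 24 Bark near 15.5 kHz.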

Pitch perception also involves the phenomenon of virtual pitch (also called residue pitch or the missing fundamental), in which the brain perceives a pitch that is not physically present in the sound stimulus. When a complex sound consists of harmonics of a fundamental frequency, the brain infers that fundamental from the pattern of the harmonics, even if the fundamental itself has been removed. This explains why a male voice heard over a telephone line, which transmits little below about 300 Hz, keeps its normal pitch even though its fundamental (often around 100 to 150 Hz) is filtered out, and why a bass line played through small speakers that cannot reproduce its lowest frequencies is still heard at the correct pitch.
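A simple way to see the missing fundamental is to build a tone from harmonics 3 to 6 of 200 Hz, so that nothing is present at 200 Hz, and then look for the repetition period of the waveform. The sketch below uses autocorrelation, the basis of a classic family of computational pitch models. It shows that the periodicity survives the missing fundamental, not how the brain actually computes pitch, and the parameter choices and names are arbitrary.

```python
import numpy as np

FS = 16_000      # sample rate (Hz)
F0 = 200.0       # fundamental frequency that is deliberately left out of the tone


def missing_fundamental_tone(f0: float, harmonics=(3, 4, 5, 6), dur: float = 0.2) -> np.ndarray:
    """Sum of equal-amplitude harmonics of f0 with no energy at f0 itself."""
    t = np.arange(int(FS * dur)) / FS
    return sum(np.sin(2 * np.pi * h * f0 * t) for h in harmonics)


def autocorrelation_pitch(x: np.ndarray, fmin: float = 50.0, fmax: float = 1000.0) -> float:
    """Pitch estimate: lag of the highest autocorrelation peak between 1/fmax and 1/fmin."""
    x = x - x.mean()
    ac = np.correlate(x, x, mode="full")[len(x) - 1:]   # keep non-negative lags only
    lo, hi = int(FS / fmax), int(FS / fmin)
    best_lag = lo + int(np.argmax(ac[lo:hi + 1]))
    return FS / best_lag


tone = missing_fundamental_tone(F0)
spectrum = np.abs(np.fft.rfft(tone))
bin_f0 = int(round(F0 * len(tone) / FS))            # FFT bin that would hold 200 Hz
print(f"magnitude at {F0:.0f} Hz relative to strongest component: "
      f"{spectrum[bin_f0] / spectrum.max():.1e}")
print(f"autocorrelation pitch estimate: {autocorrelation_pitch(tone):.1f} Hz")
```

The spectrum has essentially no energy at 200 Hz, yet the autocorrelation peak falls at a lag of 80 samples (5 ms), that is, 200 Hz, because the harmonics still repeat together every 5 ms.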

Timbre and Sound Quality

Timbre, often described as the "color" or "texture" of sound, is another fascinating aspect of psychoacoustics. It refers to the unique character of a sound that distinguishes it from others with the same pitch and loudness. Timbre is what allows us to differentiate between different musical instruments, voices, or even emotions conveyed through speech. The perception of timbre is influenced by the harmonics, attack, decay, and other spectral and temporal characteristics of a sound.

The perception of timbre is closely related to the concept of formants. Formants are resonances of the system that shapes a sound, such as the human vocal tract or the body of a musical instrument. They act as a filter on the raw sound produced by the source (for example, the vibrating vocal folds), shaping the overall spectrum and contributing to its characteristic timbre. The relative strengths and positions of formants play a significant role in the perception of vowel sounds in speech and the characteristic timbres of different musical instruments.

Timbre perception is a complex process that involves the brain's analysis of the spectral and temporal features of a sound. The brain extracts information about the relative amplitudes of the harmonics (and, to a lesser degree, their phases), as well as the attack, sustain, and decay characteristics of the sound. It then integrates this information to form a coherent perception of timbre. Interestingly, the perception of timbre can be influenced by factors such as the listener's cultural background, musical training, and emotional state.
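One widely used acoustic correlate of timbral brightness is the spectral centroid, the amplitude-weighted mean frequency of the spectrum. The sketch below builds two tones with the same 220 Hz fundamental but different harmonic roll-offs. The centroid is a descriptor used in timbre research, not a model of what the brain extracts, and the tones and function names are illustrative.

```python
import numpy as np

FS = 44_100


def harmonic_tone(f0: float, amplitudes, dur: float = 0.5) -> np.ndarray:
    """Harmonic complex with the given per-harmonic amplitudes (same pitch, different spectrum)."""
    t = np.arange(int(FS * dur)) / FS
    return sum(a * np.sin(2 * np.pi * (k + 1) * f0 * t) for k, a in enumerate(amplitudes))


def spectral_centroid(x: np.ndarray) -> float:
    """Amplitude-weighted mean frequency of the magnitude spectrum, in Hz."""
    mag = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), 1 / FS)
    return float(np.sum(freqs * mag) / np.sum(mag))


n = np.arange(1, 11)                              # first ten harmonics
dull = harmonic_tone(220.0, 1.0 / n ** 2)         # steep spectral roll-off
bright = harmonic_tone(220.0, 1.0 / n)            # gentler spectral roll-off
print(f"dull centroid:   {spectral_centroid(dull):7.1f} Hz")
print(f"bright centroid: {spectral_centroid(bright):7.1f} Hz")
```

The steep 1/n² roll-off gives a centroid of about 416 Hz and the gentler 1/n roll-off about 751 Hz. The centroid does not depend on overall level, so it separates the two sounds even though they share the same pitch.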

Loudness and Dynamic Range

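Loudness is another crucial element in psychoacoustics, referring to the subjective perception of a sound's intensity. Our auditory system can process an enormous range of levels, from the faintest whisper to the roar of a jet engine; the threshold of pain lies roughly 120 dB, or about a trillion times the intensity, above the threshold of hearing. This span is known as the dynamic range of hearing. However, the perception of loudness is not linear: doubling the sound intensity raises the level by only about 3 dB and makes the sound only modestly louder. As a rule of thumb, a sound must rise by about 10 dB, a tenfold increase in intensity, before it seems twice as loud. The decibel scale is a logarithmic measure of physical sound level that compresses this huge range into manageable numbers, while perceived loudness itself is measured in sones and grows approximately as a power function of intensity (Stevens' power law).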
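The arithmetic behind these rules of thumb is short. The sketch below converts intensity to decibels and loudness level in phons to loudness in sones, using the standard relation that loudness doubles for every 10 phon above 40 phon. For a 1 kHz tone, phons equal dB SPL, so the sketch assumes such a tone; the function names are illustrative.

```python
import math

I_REF = 1e-12   # reference intensity in W/m^2, roughly the threshold of hearing at 1 kHz


def intensity_to_db(intensity_w_m2: float) -> float:
    """Sound intensity level in dB relative to I_REF."""
    return 10.0 * math.log10(intensity_w_m2 / I_REF)


def phon_to_sone(phon: float) -> float:
    """Loudness in sones; the doubling-per-10-phon rule holds from about 40 phon upward."""
    return 2.0 ** ((phon - 40.0) / 10.0)


span_db = intensity_to_db(1.0) - intensity_to_db(I_REF)   # 1 W/m^2 is near the pain threshold
print(f"threshold of hearing to pain: about {span_db:.0f} dB")
print(f"doubling intensity adds {10 * math.log10(2):.2f} dB "
      f"and multiplies loudness by about {phon_to_sone(40 + 10 * math.log10(2)):.2f}")
for level in (40, 50, 60, 70):
    print(f"{level} phon -> {phon_to_sone(level):g} sone")
```

It reports 120 dB between 1e-12 and 1 W/m², 3.01 dB for a doubling of intensity (about 1.23 times as loud), and 1, 2, 4 and 8 sones for 40 to 70 phon.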

The perception of loudness is influenced by various factors, including frequency, duration, and the presence of other sounds. The human auditory system is most sensitive to frequencies between roughly 2 and 5 kHz, partly because the ear canal resonates near 3 kHz; this region carries many of the consonant cues that are important for understanding speech. Sounds in this range are perceived as louder than sounds of equal intensity at much lower or much higher frequencies, a relationship mapped out by equal-loudness contours. Duration matters too: up to roughly 100 to 200 ms, longer sounds are perceived as louder than shorter sounds of the same intensity, because the ear integrates energy over time; beyond that, additional duration has little effect.
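Frequency-dependent sensitivity is the reason sound level meters apply frequency weightings. The A-weighting curve roughly follows the inverse of the 40-phon equal-loudness contour; the sketch below evaluates its analog definition from IEC 61672-1. It is a measurement convention, not a loudness model, and it underestimates how loud low frequencies sound at high levels. The function name is illustrative.

```python
import math


def a_weighting_db(f_hz: float) -> float:
    """A-weighting gain in dB at frequency f_hz (analog curve defined in IEC 61672-1)."""
    f2 = f_hz ** 2
    ra = (12194.0 ** 2 * f2 ** 2) / (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20.0 * math.log10(ra) + 2.00   # +2.00 dB normalizes the curve to 0 dB at 1 kHz


for f in (50, 100, 500, 1000, 3000, 10000):
    print(f"{f:>6} Hz: {a_weighting_db(f):+6.1f} dB")
```

Expect about -19 dB at 100 Hz, 0 dB at 1 kHz and a small positive gain around 2 to 4 kHz, mirroring the region of greatest sensitivity.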

Loudness perception is also subject to loudness adaptation: when a steady sound continues for an extended period, its perceived loudness can gradually decrease. This is one reason a loud noise that initially seems unbearable can become more tolerable after prolonged exposure. Conversely, a period of reduced sound input, such as wearing earplugs for an extended time, can make subsequent sounds seem louder for a while; such changes are studied under the heading of auditory deprivation.

Masking and Auditory Scene Analysis

Psychoacoustics also delves into the phenomenon of masking, which occurs when one sound obscures or "masks" another. This is particularly relevant in noisy environments where background sounds can interfere with our ability to perceive specific sounds of interest. Masking can occur in both the frequency and time domains. Frequency (or simultaneous) masking happens when a sound makes it difficult to perceive another sound at a nearby frequency presented at the same time; the effect spreads further toward frequencies above the masker than below it. Temporal masking occurs when a loud sound makes it hard to perceive a softer sound that occurs shortly after it (forward masking, which can last roughly 100 to 200 ms) or, over a much shorter window of a few tens of milliseconds at most, shortly before it (backward masking).
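Perceptual audio coders model simultaneous masking with a spreading function on the Bark scale. The sketch below uses the spreading function of Schroeder, Atal and Hall (1979), which falls off less steeply above the masker than below it. It only gives the shape of the spread in dB relative to the masker; a real coder also applies a tonality-dependent offset and the absolute threshold of hearing, both omitted here. The function names are illustrative.

```python
import math


def hz_to_bark(f_hz: float) -> float:
    """Critical-band rate in Bark (Zwicker & Terhardt, 1980 approximation)."""
    return 13.0 * math.atan(0.00076 * f_hz) + 3.5 * math.atan((f_hz / 7500.0) ** 2)


def spreading_db(delta_bark: float) -> float:
    """Schroeder et al. (1979) spreading function in dB.

    delta_bark = probe band minus masker band; positive means the probe lies above the masker.
    """
    d = delta_bark + 0.474
    return 15.81 + 7.5 * d - 17.5 * math.sqrt(1.0 + d * d)


masker_hz = 1000.0
for probe_hz in (500.0, 800.0, 1000.0, 1300.0, 2000.0):
    dz = hz_to_bark(probe_hz) - hz_to_bark(masker_hz)
    print(f"probe {probe_hz:6.0f} Hz ({dz:+5.2f} Bark): spread {spreading_db(dz):6.1f} dB")
```

Far from the masker the curve approaches slopes of about -10 dB per Bark above it and +25 dB per Bark below it, so a probe above 1 kHz is attenuated much less than one the same distance below.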

Beyond masking, the auditory system must sort the mixture of sounds reaching the ears into separate sources, a process that Albert Bregman named auditory scene analysis. This refers to the brain's ability to separate and analyze complex auditory scenes, allowing us to focus on a particular sound source while filtering out irrelevant background noise. Auditory scene analysis involves grouping sound elements that belong to the same source and segregating them from other sources. This process relies on various cues, such as spatial location, pitch, timbre, and temporal patterns, to organize the auditory input into meaningful perceptual units.

One of the most well-known examples of auditory scene analysis is the "cocktail party effect." In a noisy social gathering, the brain can selectively attend to a particular conversation while filtering out the competing background chatter. This is achieved through a combination of spatial, spectral, and temporal cues that allow the brain to separate the target speech from the interfering noise. Researchers have studied the cocktail party effect extensively to understand the mechanisms underlying auditory scene analysis and to develop algorithms for speech separation and enhancement in challenging acoustic environments.
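Speech-separation research often uses the ideal binary mask as a reference point: split the mixture into time-frequency cells and keep only those where the target is stronger than the interference. The sketch below applies it to a toy mixture of two tones using simple non-overlapping frames. It is an oracle, because it needs the clean target and interference, so it shows what a perfect grouping would achieve rather than how to obtain one. The signals, frame size, 0 dB local criterion and function names are illustrative choices.

```python
import numpy as np

FS = 16_000
FRAME = 512   # samples per analysis frame (32 ms at 16 kHz)


def frames(x: np.ndarray) -> np.ndarray:
    """Split x into non-overlapping rectangular frames, zero-padding the tail."""
    n_frames = -(-len(x) // FRAME)                       # ceiling division
    padded = np.pad(x, (0, n_frames * FRAME - len(x)))
    return padded.reshape(n_frames, FRAME)


def ideal_binary_mask_separation(target, interference, lc_db: float = 0.0):
    """Oracle separation: keep time-frequency cells where the target dominates."""
    mixture = target + interference
    t_tf = np.fft.rfft(frames(target), axis=1)
    i_tf = np.fft.rfft(frames(interference), axis=1)
    m_tf = np.fft.rfft(frames(mixture), axis=1)
    eps = 1e-12
    local_snr_db = 20 * np.log10((np.abs(t_tf) + eps) / (np.abs(i_tf) + eps))
    mask = local_snr_db > lc_db                          # local criterion, 0 dB by default
    separated = np.fft.irfft(m_tf * mask, n=FRAME, axis=1).reshape(-1)
    return separated[: len(mixture)], mask


t = np.arange(FS) / FS
target = np.sin(2 * np.pi * 500 * t) * (t < 0.5)         # "talker" active in the first half
interference = 0.8 * np.sin(2 * np.pi * 3000 * t)        # steady competing tone
estimate, mask = ideal_binary_mask_separation(target, interference)
corr = np.corrcoef(estimate, target)[0, 1]
print(f"mask keeps {mask.mean():.1%} of cells; correlation with target: {corr:.3f}")
```

Practical systems have to estimate such a mask from the mixture alone, for example from pitch, spatial or learned cues, and usually use overlapping windows to avoid artifacts at frame edges.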

Spatial Hearing and Sound Localization

Spatial hearing, or the ability to localize sound sources in three-dimensional space, is another important aspect of psychoacoustics. The human auditory system uses a variety of cues to determine the location of a sound source, including interaural time differences (ITDs), interaural level differences (ILDs), and spectral cues.

ITDs are the differences in the arrival time of a sound at the two ears. Because sound travels at a finite speed, a sound originating from the side reaches the closer ear slightly earlier than the farther ear. These differences are tiny, ranging from zero for a source straight ahead to roughly 650 to 700 microseconds for a source directly to one side, and listeners can detect differences as small as about 10 microseconds; the brain uses them to estimate the horizontal position of the sound source. ILDs, on the other hand, are differences in the level of a sound at the two ears: a sound coming from the side is louder in the closer ear because the head casts an acoustic shadow. ITDs are most useful at low frequencies and ILDs at high frequencies (above roughly 1.5 kHz), where the head is large relative to the wavelength; this division of labor is known as the duplex theory. The brain combines ITD and ILD cues to determine the azimuth (horizontal angle) of a sound source.
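The size of ITDs follows from head geometry. The sketch below uses Woodworth's spherical-head formula, ITD = (r/c)(θ + sin θ), with an assumed head radius of 8.75 cm, then recovers the delay from a simulated pair of ear signals by cross-correlation, the operation many binaural models are built around. The ear signals are plain delayed noise with no head filtering or level difference, so this is a toy, and the names are illustrative.

```python
import math

import numpy as np

HEAD_RADIUS_M = 0.0875      # assumed average head radius
SPEED_OF_SOUND = 343.0      # m/s in air at about 20 degrees C
FS = 48_000


def woodworth_itd(azimuth_deg: float) -> float:
    """Spherical-head ITD in seconds for a distant source (azimuth 0 to 90 degrees)."""
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS_M / SPEED_OF_SOUND * (theta + math.sin(theta))


def estimate_itd(left: np.ndarray, right: np.ndarray, max_itd_s: float = 1e-3) -> float:
    """Lag in seconds that maximizes the interaural cross-correlation (positive = right ear lags)."""
    max_lag = int(round(max_itd_s * FS))
    n = len(left)
    core = left[max_lag:n - max_lag]
    lags = np.arange(-max_lag, max_lag + 1)
    scores = [np.dot(core, right[max_lag + lag:n - max_lag + lag]) for lag in lags]
    return lags[int(np.argmax(scores))] / FS


itd = woodworth_itd(90.0)                    # source directly to the listener's left
delay = int(round(itd * FS))                 # ITD rounded to whole samples
rng = np.random.default_rng(0)
left = rng.standard_normal(FS // 10)         # 100 ms of noise at the near (left) ear
right = np.concatenate([np.zeros(delay), left[:len(left) - delay]])  # far ear hears it later
print(f"predicted ITD at 90 degrees: {itd * 1e6:.0f} us ({delay} samples)")
print(f"cross-correlation estimate:  {estimate_itd(left, right) * 1e6:.0f} us")
```

For a source at 90 degrees the formula gives about 656 µs, which rounds to 31 samples at 48 kHz, and the cross-correlation peak recovers exactly that lag.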

Spectral cues, which arise from the interaction of sound waves with the outer ear (pinna) and the head, provide information about the elevation and front-back location of a sound source. As sound waves enter the ear canal, they are filtered and modified by the complex geometry of the pinna. These spectral modifications vary depending on the direction of the sound source and serve as cues for vertical localization. Additionally, the brain uses head movements to resolve front-back ambiguities and improve localization accuracy.

The ability to localize sounds is crucial for navigating and interacting with the environment. It allows us to orient ourselves towards sound sources of interest, avoid potential dangers, and engage in effective communication. Spatial hearing also plays a significant role in the perception of music and the creation of immersive audio experiences. Techniques such as binaural recording and 3D audio simulation leverage the principles of spatial hearing to create realistic and engaging soundscapes.

Ultrasonic Hearing and the Hypersonic Effect

One of the most intriguing aspects of psychoacoustics is ultrasonic hearing and the hypersonic effect. Ultrasonic hearing refers to the human ability to perceive sounds at frequencies above the typical upper limit of human hearing, which is around 20 kHz and declines with age. While it may seem counterintuitive, research has shown that humans can detect ultrasonic frequencies under certain conditions, most notably when the sound is delivered by bone conduction rather than through the air.

The mechanism behind ultrasonic hearing is still a topic of debate among researchers. One theory suggests that ultrasonic sounds stimulate the inner hair cells located at the base of the cochlea, which are sensitive to high-frequency sounds. Another theory proposes that ultrasonic signals resonate within the brain and are then modulated down to frequencies that the cochlea can detect.

Several studies have explored these effects. For example, Lenhardt and colleagues reported that speech modulated onto an ultrasonic carrier and delivered by bone conduction could be understood by listeners, including some with profound hearing loss. The hypersonic effect, a term coined by Tsutomu Oohashi and colleagues, refers to the controversial idea that the presence or absence of ultrasonic frequencies in music can influence human physiological and psychological responses, even when these frequencies are not consciously perceived. Their study reported that subjects showed enhanced alpha-wave brain activity and preferred music containing high-frequency components above 25 kHz, despite being unable to consciously distinguish between the presence and absence of these frequencies.
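The idea of modulating audio onto an ultrasonic carrier, and of a nonlinearity shifting it back into the audible range, can be illustrated numerically. The sketch below amplitude-modulates a 1 kHz tone onto a 30 kHz carrier and then squares the signal. The square-law nonlinearity is a stand-in chosen for illustration, not an established model of how the ear or head demodulates ultrasound, and all parameter values and names are arbitrary.

```python
import numpy as np

FS = 192_000          # high sample rate so the ultrasonic carrier is representable
DUR = 0.1             # seconds
FC = 30_000.0         # ultrasonic carrier (Hz)
FM = 1_000.0          # audible "message" tone (Hz)
M = 0.8               # modulation depth

t = np.arange(int(FS * DUR)) / FS
am = (1.0 + M * np.cos(2 * np.pi * FM * t)) * np.cos(2 * np.pi * FC * t)


def audible_energy_fraction(x: np.ndarray, cutoff_hz: float = 20_000.0) -> float:
    """Fraction of non-DC spectral energy lying below cutoff_hz."""
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), 1 / FS)
    power[0] = 0.0                                    # ignore the DC offset
    return float(power[freqs < cutoff_hz].sum() / power.sum())


def strongest_audible_component(x: np.ndarray, cutoff_hz: float = 20_000.0) -> float:
    """Frequency (Hz) of the largest spectral peak between DC and cutoff_hz."""
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), 1 / FS)
    band = (freqs > 0) & (freqs < cutoff_hz)
    return float(freqs[band][np.argmax(power[band])])


demodulated = am ** 2     # hypothetical square-law (quadratic) nonlinearity
print(f"AM signal: {audible_energy_fraction(am):.1e} of its energy below 20 kHz")
print(f"after squaring: {audible_energy_fraction(demodulated):.2f} below 20 kHz, "
      f"strongest audible component at {strongest_audible_component(demodulated):.0f} Hz")
```

Before squaring, essentially none of the signal's energy lies below 20 kHz; after squaring, a strong 1 kHz component appears, because multiplying the modulated carrier by itself recovers its envelope.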

Applications of Psychoacoustics

The principles of psychoacoustics find numerous applications in various fields, ranging from music and audio engineering to hearing aids and virtual reality. Understanding how the human auditory system perceives and responds to sound is crucial for designing and optimizing audio technologies that enhance the listener's experience.

In music production and audio engineering, psychoacoustics plays a vital role in creating immersive and emotionally engaging soundscapes. Sound engineers and producers apply their knowledge of pitch, timbre, loudness, and spatial hearing to craft audio mixes that are balanced, clear, and impactful. They use techniques such as equalization, compression, and reverb to shape the spectral and temporal characteristics of sound, ensuring that each element of the mix is audible and contributes to the desired emotional response.
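Compression is a good example of psychoacoustics turned into a signal-processing rule: it reduces the level difference between loud and quiet passages so that quieter elements stay audible. The sketch below is only the static gain curve of a hard-knee downward compressor. Real compressors add attack and release time constants and often a soft knee, and the parameter names and defaults here are illustrative.

```python
def compressor_output_db(input_db: float, threshold_db: float = -20.0, ratio: float = 4.0,
                         makeup_db: float = 0.0) -> float:
    """Static (hard-knee) downward compression curve.

    Below the threshold the level passes unchanged; above it, every `ratio` dB of input
    yields only 1 dB more output. Makeup gain then shifts the whole curve up.
    """
    if ratio < 1.0:
        raise ValueError("ratio must be >= 1")
    if input_db <= threshold_db:
        out = input_db
    else:
        out = threshold_db + (input_db - threshold_db) / ratio
    return out + makeup_db


for level in (-40.0, -20.0, -10.0, 0.0):
    print(f"in {level:6.1f} dBFS -> out {compressor_output_db(level):6.1f} dBFS")
```

With a -20 dBFS threshold and a 4:1 ratio, inputs of -10 and 0 dBFS come out at -17.5 and -15 dBFS, so a 10 dB input difference shrinks to 2.5 dB.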

Psychoacoustics also guides the design of concert halls, recording studios, and audio equipment to optimize sound quality and listener experience. Acoustic engineers consider factors such as reverberation time, early reflections, and frequency response when designing spaces for music performance and recording. They aim to create environments that enhance the clarity, spaciousness, and intimacy of the sound while minimizing unwanted reflections and resonances.
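Reverberation time is commonly estimated at the design stage with Sabine's formula, RT60 = 0.161 V / A, where V is the room volume in cubic meters and A the total absorption in square meters (metric sabins). The sketch below compares a hypothetical bare room with the same room after adding an absorptive ceiling. The dimensions and absorption coefficients are made-up illustrative values, and Sabine's formula becomes less accurate in very absorptive rooms.

```python
def sabine_rt60(volume_m3: float, surfaces) -> float:
    """Sabine reverberation time in seconds.

    surfaces: iterable of (area_m2, absorption_coefficient) pairs.
    RT60 = 0.161 * V / A, where A = sum(area * alpha) is the total absorption.
    """
    absorption = sum(area * alpha for area, alpha in surfaces)
    if absorption <= 0:
        raise ValueError("total absorption must be positive")
    return 0.161 * volume_m3 / absorption


# Hypothetical 10 m x 8 m x 4 m room; coefficients are illustrative, not measured values.
length, width, height = 10.0, 8.0, 4.0
volume = length * width * height
walls = 2 * (length + width) * height
bare = [(length * width, 0.02), (length * width, 0.02), (walls, 0.05)]      # floor, ceiling, walls
treated = [(length * width, 0.02), (length * width, 0.60), (walls, 0.05)]   # absorptive ceiling
print(f"bare room:    RT60 = {sabine_rt60(volume, bare):.2f} s")
print(f"treated room: RT60 = {sabine_rt60(volume, treated):.2f} s")
```

For these numbers the bare room's RT60 is about 5 s and the treated room's under 1 s.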

Moreover, psychoacoustics has significant implications for noise control and environmental acoustics. Understanding how the human auditory system perceives and responds to different types of noise can inform the design of quieter and more acoustically pleasant environments. This knowledge is valuable in urban planning, transportation, and architectural acoustics, where reducing noise pollution and enhancing acoustic comfort are key objectives.

Psychoacoustics also plays a crucial role in the development of virtual reality and immersive audio experiences. By leveraging principles such as sound localization, spatial hearing, and binaural audio, designers can create virtual environments that closely mimic real-world auditory perceptions. This has applications in gaming, simulation training, and virtual tourism, where realistic and spatially accurate sound is essential for creating a sense of presence and immersion.

In the field of hearing aids and assistive listening devices, psychoacoustics is essential for improving speech intelligibility and overall sound quality for individuals with hearing impairments. By understanding the specific perceptual challenges faced by people with hearing loss, such as reduced frequency resolution and increased susceptibility to masking, hearing aid designers can develop algorithms and signal processing techniques that enhance the audibility and clarity of speech while reducing background noise.
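Noise reduction in hearing devices builds on classic techniques such as spectral subtraction (Boll, 1979): estimate the average noise spectrum during pauses and subtract it from each frame of the noisy signal. The sketch below is a bare-bones offline version with rectangular, non-overlapping frames and a sine wave standing in for speech. Real hearing aids use low-latency, overlapping filter banks and more careful gain rules; the signals and names here are illustrative.

```python
import numpy as np

FRAME = 512


def to_frames(x: np.ndarray) -> np.ndarray:
    """Non-overlapping rectangular frames; trailing samples that do not fill a frame are dropped."""
    n = len(x) // FRAME
    return x[: n * FRAME].reshape(n, FRAME)


def spectral_subtraction(noisy: np.ndarray, noise_only: np.ndarray) -> np.ndarray:
    """Basic magnitude spectral subtraction with a zero floor, keeping the noisy phase."""
    noise_mag = np.abs(np.fft.rfft(to_frames(noise_only), axis=1)).mean(axis=0)
    spec = np.fft.rfft(to_frames(noisy), axis=1)
    clean_mag = np.maximum(np.abs(spec) - noise_mag, 0.0)    # subtract, clip at zero
    enhanced = clean_mag * np.exp(1j * np.angle(spec))      # reuse the noisy phase
    return np.fft.irfft(enhanced, n=FRAME, axis=1).reshape(-1)


def snr_db(reference: np.ndarray, estimate: np.ndarray) -> float:
    """SNR of estimate against the first len(estimate) samples of reference."""
    n = len(estimate)
    err = estimate - reference[:n]
    return float(10 * np.log10(np.sum(reference[:n] ** 2) / np.sum(err ** 2)))


fs = 16_000
rng = np.random.default_rng(0)
t = np.arange(fs) / fs
clean = np.sin(2 * np.pi * 1000 * t)               # stand-in for the wanted signal
noisy = clean + 0.3 * rng.standard_normal(fs)
noise_only = 0.3 * rng.standard_normal(fs)         # e.g. captured during a pause
enhanced = spectral_subtraction(noisy, noise_only)
print(f"SNR before: {snr_db(clean, noisy):.1f} dB, after: {snr_db(clean, enhanced):.1f} dB")
```

The SNR improves by several decibels here, but the crude zero floor leaves isolated spectral peaks that are heard as "musical noise", one reason practical systems smooth their gains.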

In the realm of subliminal audio, psychoacoustics offers insights into how the brain processes and responds to different sound frequencies, intensities, and patterns. Practitioners of subliminal audio embed carefully crafted messages in audio content with the aim of reaching the subconscious mind and influencing thoughts, beliefs, and behaviors. Psychoacoustic principles, such as masking and the absolute threshold of hearing, guide how such messages are mixed so that they remain below the threshold of conscious perception.

Conclusion

Psychoacoustics is a captivating field that unravels the mysteries of how our brains perceive and interpret sound. From pitch perception and timbre to loudness and auditory scene analysis, psychoacoustics sheds light on the intricate mechanisms that shape our auditory experiences. By understanding these principles, we can harness the power of sound to create immersive and effective experiences, whether in music, audio engineering, or subliminal audio.
